- OneStream Community

- Forums

- Workflow and Data Integration

- Re: Exporting Data automatically to outside of OneStream

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

12-22-2021

12:19 PM

- last edited on

07-31-2023

08:29 AM

by

![]() JackLacava

JackLacava

HI

I am trying to automate a data extract process to send data from OneStream to another system eg Anaplan. I have a data Mgmt job to Export the data to a CSV on the file share. I now want to move that CSV file from the file share to say my desktop for example (or any other file storage place). Has anyone done any routine like that? If so is there any BR in OneStream that can be used for that. I havent done this before but sure others may have come across this so just wondered what the best approach would be.

Thanks!

Tahir

Solved! Go to Solution.

- Labels:

-

Data Management

-

FileSystem

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

01-19-2022 06:28 AM

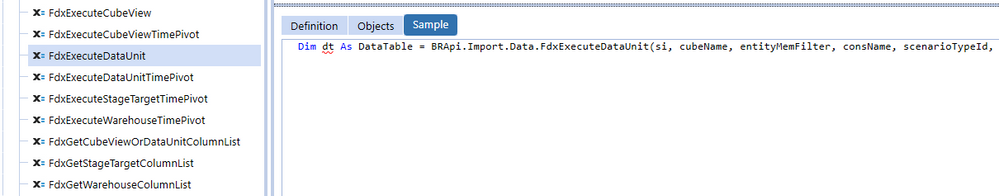

If you are not afraid of Business rules, you should try to use the Fdx functions within BRAPI, they are creating a datatable object and they are retrieving the data very fast, they are all starting with

BRApi.Import.Data.Fdx<Something>

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

12-23-2021 01:10 PM

Are you on prem or in the cloud?

We are cloud for OneStream and need many different applications to consume the OneStream data. I set up automation to export to SQL Server so that we don't have to deal with the hassles of files. If you don't have an external SQLconnection, one would need to be set up. Next create a business rule with a query to get the data you want and write it to the SQL table. Set up a Data management step and sequence. Finally, create a OneStream Scheduled task to run the data management sequence for the daily/weekly/monthly schedule you want the process to run.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

01-13-2022 07:20 AM

HI Scott

Many thanks for replying! We are on the Cloud. We have a connection to SQL for my Bi Blend job which spits out data to a View table.

One question - what do you use the DM for in this process?

Thanks

Tahir

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

01-13-2022 07:59 AM

The data management sequence is needed in order to use the task scheduler to run the export each day/week/month. The DM sequence calls a DM step which runs a business rule. the business rule contains the logic to pull the data out of the cubes/BIBlend and replicate the data out of onestream's database into our internal database server.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

01-13-2022 09:00 AM

Thanks that makes sense! Will have a play with the above ideas

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

07-13-2022 12:08 AM

Hi Scott!

Which tables in Onestream store the parent-child members? I saw the dimension table as well as member table but cannot find the one that has the parent-child relationships

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

07-13-2022 07:49 AM

Relationship table.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

01-03-2022 11:56 AM

scottr's approach is the way to go. Exporting base level data to a file and then pushing it into an external SQL database is very fast. The only down side of this approach is that exporting parent members can really put a damper on performance. To avoid this, we developed a two step process:

- Export the parent child relationships for all the dimensions into external SQL tables

- Export base level data from the cube to an external table

This method is much faster than exporting all data out of OneStream but puts the burden of doing the aggregation math on the external SQL table.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

01-13-2022 07:22 AM

HI Gidon

Thanks for replying - that sounds like a good approach will have a tinker!

Thanks!

Tahir

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

07-13-2022 12:06 AM

Hi Gidon!

We just set up our rest API and need to extract parent child members of the account hierarchy. Not sure where to start though can you point me to any documentation that has helped you export metadata to external SQL dbs? Thanks

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

07-13-2022 09:49 AM

We use a data adapter to query the Relationship table so that we can see exactly what the relationships look like. Then we reference the that data adapter to get the SQL statement into the EBR.

This is the query that pulls the relationships ("ADU_Dim_Metadata":

WITH Rec

AS (SELECT Member.MemberID, Name, Description, 0 as ParentID, 1 as Level, DimTypeID, CAST(0 as DECIMAL) as Agg

FROM Member

WHERE Member.MemberID = (select MemberID from Member where Name = '|!ADU_Param_Select_Top_Member!|' )

UNION ALL

SELECT m.MemberID, m.Name, m.Description, this.ParentID, parent.Level + 1, m.DimTypeID, CAST (UDAggWeight AS DECIMAL) as Agg

FROM Rec parent

INNER JOIN Relationship this ON this.ParentID = parent.MemberID

INNER JOIN Member m ON this.ChildID = m.MemberID

)

SELECT distinct

'' as RowID,

'|!ADU_Param_Select_Dim_Name!|' as Dim_Name,

'|!ADU_Param_Select_Dim_Type!|' as Dim_Type,

Rec.MemberID, Name as Member_Name, Description as Member_Description, ParentID,

(select Name from Member where MemberID=ParentID) as Parent_Name,

'0' as Level,

CASE

WHEN Rec.DimTypeID = 5 AND mp.DecimalValue =0 THEN 'Group'

WHEN Rec.DimTypeID = 5 AND mp.DecimalValue =1 THEN 'Revenu'

WHEN Rec.DimTypeID = 5 AND mp.DecimalValue =2 THEN 'Expense'

WHEN Rec.DimTypeID = 5AND mp.DecimalValue =3 THEN 'Asset'

WHEN Rec.DimTypeID = 5 AND mp.DecimalValue =4 THEN 'Liability'

WHEN Rec.DimTypeID = 5AND mp.DecimalValue =5 THEN 'Flow'

WHEN Rec.DimTypeID = 5 AND mp.DecimalValue =6 THEN 'Balance'

WHEN Rec.DimTypeID = 5 AND mp.DecimalValue =7 THEN 'BalanceReccuring'

WHEN Rec.DimTypeID = 5 AND mp.DecimalValue =8 THEN 'NonFinancial'

WHEN Rec.DimTypeID = 5 AND mp.DecimalValue =9 THEN 'DynamicCalc'

WHEN Rec.DimTypeID <> 5 THEN 'N/A'

ELSE 'Other'

END as Acct_Type,

Agg,

CURRENT_TIMESTAMP as Update_Time_Stamp

FROM REC

LEFT JOIN (SELECT * FROM MemberProperty WHERE PropertyID = 100) mp on Rec.MemberID = mp.MemberID

--order by Level;

This is the EBR that gets the SQL query from the data adapter, replaces the parameters, and executes the query to push the data out to an external database:

#Region "Metadata Transfer"

Private Function MetaData_Transfer (ByVal si As SessionInfo, dimName As String, dimType As String, topMember As String, StageTableName As String) As Boolean

' system.Threading.Thread.Sleep(10000)

Dim AdapterName As String = "ADU_Dim_Metadata"

'Get the sql from the data adapter

Dim AdapterContents As String = BRApi.Database.LookupRowFieldValue(si, "App", "DashboardAdapter", "Name='" & AdapterName & "'", "SQLQuery", "")

'send the Adapter Contents to the log

brapi.ErrorLog.LogMessage(si,"AdapterContents before changes: " & AdapterContents.ToString)

'Change Top Member Filter

AdapterContents=StringHelper.ReplaceString(AdapterContents,"|!ADU_Param_Select_Top_Member!|", topMember, True)

'Change Dim Name Filter

AdapterContents=StringHelper.ReplaceString(AdapterContents,"|!ADU_Param_Select_Dim_Name!|", dimName, True)

'Change DIm Type Filter

AdapterContents=StringHelper.ReplaceString(AdapterContents,"|!ADU_Param_Select_Dim_Type!|", dimType, True)

'Send new Adapter Contents string to the log

brapi.ErrorLog.LogMessage(si,"AdapterContents: " & AdapterContents.ToString)

'Get metadata into a table

Dim objDbConnInfoApp As DbConnInfoApp = BRApi.Database.CreateApplicationDbConnInfo(si)

Dim dt As DataTable = BRApi.Database.ExecuteSql(objDbConnInfoApp, AdapterContents, False)

BRApi.Database.SaveCustomDataTable(si, externalConnector, StageTableName, dt, True)

Return True

End Function

#End Region

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

07-13-2022 09:44 PM

Thank you so much!!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

01-19-2022 06:28 AM

If you are not afraid of Business rules, you should try to use the Fdx functions within BRAPI, they are creating a datatable object and they are retrieving the data very fast, they are all starting with

BRApi.Import.Data.Fdx<Something>

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

01-19-2022 06:45 AM

Hi,

Many thanks for the suggestions will have look into that!

Thanks

Tahir

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

01-20-2022 09:14 AM

Hey Christian, how does the filter parameter for the FdxExecuteDataUnit method work? It's a string and I assumed it would be similar to the Data Management extract filter (i.e. something like "A#All:U1#Top:U2#Top" etc) but that's not working. It doesn't like the syntax of having the # in the string.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

05-05-2022 06:30 AM

Hi Mike

Sorry for the late answer. The filter works like in an sql query's where statement: "Account = 'Test' or Flow='None'"

Cheers

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

04-25-2022 10:37 PM

You can extract the data through DM Base level or Consolidated Level based on POV and you can read the each row and either you can use RESTAPI to send it to the Target System. Yo have to pass the CSV output to JSON body so it can import into target system and another cool thing is you can also export to datatables.

Krishna

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

07-14-2022 08:24 AM

You can do this multiple ways.

1. Export the DM Job to csv to an external storage like AWS or Azure Storage using a Extended BR.

2. Export the DM job to Data Table and use the RESTAPI from of your Target (ANAPLAN) to call directly from OS Business Extended BR.

3. It can be exported to Database As well.

Krishna

- File Explorer Incoming workflow folders missing in copied applications in Workflow and Data Integration

- Exporting data to a Flat File in the OneStream File System using C# in Workflow and Data Integration

- Best Approach to Exporting Dashboards Outside of OneStream? in Reporting

- Export a report to Excel Error - Unable to open file in Reporting

- Extended Analytics export in Workflow and Data Integration