- OneStream Community

- Forums

- Reporting

- What's the best way to pull 16M+ rows of data

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

What's the best way to pull 16M+ rows of data

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

11-14-2023 03:06 PM

Hello!

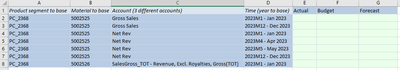

I have a user group who needs a specific set of data pulled just after close every month in order to populate other reporting tools they use. Here's the formatting they require:

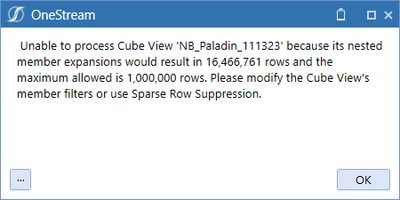

I'm struggling on my options because this results in about 16M+ rows of data. I get an error when trying to export the CV and a QV won't show all rows even with the max # of seconds set to the largest number (which I believe is 600). A DM extract will not show the right format but I don't think a QV can be auto run and sent via Parcel Service. What are my other options?

Thanks for helping me brainstorm!

- Labels:

-

Cube View

-

Excel Add In

-

Reports

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

11-14-2023 04:00 PM

You can use this function "BRApi.Import.Data.FdxExecuteCubeView(si, cubeViewToExtract, entityDimName, entityMemFilter, scenarioDimName, scenarioMemFilter, timeMemFilter, nameValuePairs, includeCellText, useStandardFields, filter, parallelQueryCount, logStats)" to retrieve the data into a DataTable. Then, using a StreamWriter to a CSV.

Hope it helps!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

11-14-2023 04:06 PM

Thanks - is there more instruction somewhere that you know of which I could review to see if this will allow the flexibility I need?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

11-15-2023 11:08 AM - edited 11-15-2023 11:54 AM

It's part of the so-called FDX (Fast Data eXtract) APIs. If you search around the forum you should find quite a few examples. We also cover them in the BiBlend class, so if you know anyone who attended that you can ask him for the material. They are in the latest docs at https://documentation.onestream.com - they show them applied to Data Source Connectors, but will work as well in Dashboard Data Set rules or any other type of rule.

Basically, they allow fast extraction of Cube Views, Data Unit records, or Stage records, and also allow to pivot them on the Time dimension if necessary (i.e. getting a column for January, one for February, etc, which can compress the number of records pretty dramatically). I don't think flexibility will be a problem, but they do require a bit of coding to set up all the parameters they require.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

11-16-2023 05:21 PM

Thanks so much for the direction! I've set up the BR and the data adapter and when I test, I get the same error I received when I built and attempted to export the initial CV:

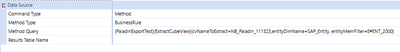

What am I doing wrong? Here's the code (which I shamelessly stole from this post Solved: FDX Cube View Rule - OneStream Community (onestreamsoftware.com)):

And my data adapter set up:

Any direction would be very much appreciated!!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

11-16-2023 11:49 PM

Some thoughts:

1. FdxExecuteCubeViewTimePivot might get you closer with periods in your columns but 16mil/12 is still more than 1mil so probably not helpful

2. Have you tried the other FDX options that don't rely on a cube view? E.g., BRapi.Import.Data.FDXExecuteDataUnit or BRapi.Import.Data.FDXExecuteDataUnitTimePivot? Not sure what their limits are.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

11-17-2023 09:15 AM

Hi!

Thanks - I don't want periods in the columns though so that wouldn't be an option anyway.

I haven't but that was my next step! Thanks 🙂

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

11-22-2023 05:19 PM

Well I wouldn't forget that you're just trying to get the data out here. You can always sort out the format/structure of it at a later stage.

I guess another option is splitting your extract by one of your dimensions and then putting it back together after (or letting the user do it).

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

11-27-2023 09:00 AM

I'm trying to solve without manual user manipulation, not just trying to get the data out. I can do a flat file DM job if I wasn't looking for a one button solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

11-27-2023 05:12 PM

Yes but my point is your flat file doesn't need to be the end of your process. So if you hit a brick wall with other extraction options you have the option to use one of the imperfect options and manipulate your extracted data either in OS, the target system(s), or somewhere in between.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

12-04-2023 10:02 AM

Understood

- Data Cell Access Security (Slice Security) Not Working With Multiple UD Dimensions in Application Build

- Custom table limitation in Workflow and Data Integration

- How can I ( if possible ) set a UD static value from a data source based on contents of a column in Workflow and Data Integration

- SQL Table Insert Not adding all Records in Rules

- QuickViews - Message - "Page 1. Use row paging buttons in Task Pane" in Reporting